AI is helping organizations become more innovative and more competitive in the marketplace. But in the excitement of gaining new technological capabilities, IT leaders cannot lose sight of two critical but often ignored aspects of AI: ethical practices and data privacy.

According to The 2024 Global AI Report, the “rapid commoditization of AI technologies, while democratizing access to information and fostering innovation, also opens organizations to inherent risks, highlighting the need for the adoption of responsible AI practices.”

AI’s ease and speed mean it can be rapidly integrated into multiple areas of business operation. While speed is always a coveted quality in the business world, the rapid expansion of AI carries the risk of unintended consequences, such as unethical use and data exposure.

The global survey of over 1,400 IT decision-makers also reported that 39% of respondents say that ethical AI practices and data privacy are crucial on the IT side of the house and must factor heavily into AI deployment decisions. Further, they emphasize that AI adoption must equally account for the well-being of the company, employees and customers.

But how can today’s organizations achieve the goals of ethical AI and data privacy? The Foundry for AI by Rackspace (FAIR™) has an answer based on deep experience and practice.

Responding to a responsible AI mission

Like most new technologies and inventions, AI technologies can be used for the good of humanity, or a few bad actors could use them for nefarious purposes. For example, since the advent of nuclear technologies around the time of World War II, we’ve seen how some world bodies have struggled to keep a lid on the proliferation of those technologies while, at the same time, harnessing their power for the good of society.

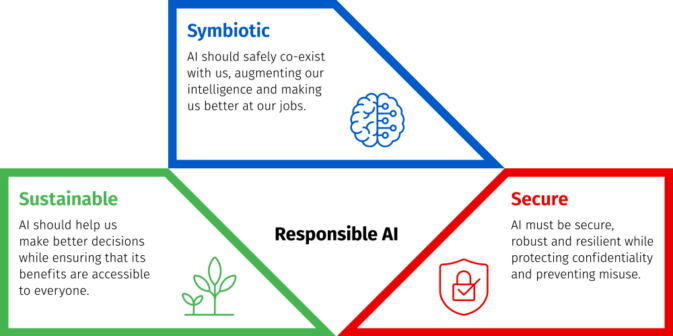

AI technologies are still in their infancy but are evolving faster than most other new technologies have historically. To help keep AI as a force for good versus evil while it’s moving at lightning speed, it’s important that governments, societies and organizations formulate guidelines and guardrails around innovation and AI technology use now. As companies embrace an AI-driven future, it’s critical that they also ensure their AI practices and solutions are symbiotic, secure and sustainable. This requires a responsible approach.

Responsible AI refers to the development, deployment and use of AI in ways that are ethical, trustworthy, fair, unbiased, transparent, and beneficial to society and individuals. It requires a focus that spans innovation, productivity and technology. The goal should be to use AI as a decision-support system, not as a decision-maker, by focusing on three dimensions of AI: symbiotic, secure and sustainable.

Supporting the responsible adoption of AI with FAIR

FAIR helps you accelerate the adoption of responsible AI, no matter your industry or needs. FAIR represents the considerable thought and effort we’ve put into developing an approach to AI adoption and integration that will help us, our customers and the world contribute to using AI for the good of humanity. Through in-depth research, multiple leadership discussions and the work of building our own ethical AI practice, we’ve created a philosophy and a structure to help guide the future of responsible AI within every organization.

Our goal is to help ensure that, as AI becomes increasingly integrated into our daily lives and economic structures, we’re harnessing its maximum potential while meticulously managing risks and helping to ensure that the benefits are widely distributed.

Here is a high-level view of the FAIR approach to responsible AI and our belief in AI solutions that are symbiotic, secure and sustainable.

Symbiotic AI: AI solutions should be a natural extension and evolution of existing technology systems. In other words, they must be explainable, accountable and transparent. AI solutions are only as good as the data used to train the models, and legacy data inherently reflects biased human decisions. It’s important for organizations to verify the data used to train their models to ensure that it supports fair outcomes.

Along with proper data ingestion, AI solutions require built-in safeguards that can be used to help explain decisions made by the systems and humans. To achieve this, AI needs to be:

- Explainable: At any given instance, decisions that are made by autonomous systems or used in human decision making should be explainable and include logic and data that supports the decisions.

- Accountable: Each step of decision-making must be logged with its data and logic so that it can be audited at appropriate levels, as needed.
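To make the accountability idea concrete, here is a minimal sketch of what logging each decision step with its data and logic could look like. It is an illustration under our own assumptions, not a description of any FAIR tooling: the `DecisionRecord` fields, the `AuditLog` class and the example values are all hypothetical. Each entry is chained to the previous one with a SHA-256 hash so that an auditor can detect after-the-fact edits.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class DecisionRecord:
    """One auditable decision step (hypothetical schema for illustration)."""
    model_version: str
    inputs: dict                       # the data that supported the decision
    output: str                        # what the system recommended
    rationale: str                     # the logic behind the recommendation
    reviewed_by: Optional[str] = None  # human reviewer, if any
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


class AuditLog:
    """Append-only, in-memory log; each entry's hash covers the previous hash."""

    def __init__(self):
        self.entries = []

    def append(self, record):
        prev_hash = self.entries[-1]["hash"] if self.entries else ""
        payload = json.dumps(asdict(record), sort_keys=True)
        entry_hash = hashlib.sha256((prev_hash + payload).encode()).hexdigest()
        self.entries.append(
            {"payload": payload, "prev_hash": prev_hash, "hash": entry_hash}
        )
        return entry_hash

    def verify(self):
        """Recompute the chain; return False if any entry was altered."""
        prev_hash = ""
        for entry in self.entries:
            expected = hashlib.sha256(
                (prev_hash + entry["payload"]).encode()
            ).hexdigest()
            if entry["prev_hash"] != prev_hash or entry["hash"] != expected:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append(DecisionRecord(
    model_version="credit-model-1.3",  # assumed name, for illustration only
    inputs={"income": 52000, "tenure_months": 18},
    output="refer_to_human",
    rationale="Score 0.48 falls inside the manual-review band 0.40-0.60",
))
print(log.verify())  # True while the log is untouched
```

A production system would persist entries to durable, access-controlled storage rather than a Python list. The sketch only shows the shape of the record and one way to make it tamper-evident.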

Secure AI: With the digitization of all aspects of our life, it’s imperative for AI solutions to ensure data privacy and objectivity. To this end, AI systems should be built in ways that support privacy, resiliency and compliance.

- Privacy: AI systems must ensure all privacy norms in practice and in law are implemented from the beginning and not retrofitted as an afterthought. This means that systems must be designed, built and reviewed with all relevant security and privacy practices from the very beginning.

- Resiliency: AI solutions, like your other mission-critical applications and systems, must be built to recover from malicious attacks quickly.

- Compliance: Regulations around AI systems are still nascent but evolving rapidly, and organizations are responsible for ensuring that they comply with all applicable regulations. This is particularly important given the global nature of AI solutions and the divergent regulatory requirements. To address compliance mandates successfully when facing vague or imprecise guidance, it’s a good practice to choose the highest and strictest levels of governance rather than a minimalistic approach.

Sustainable AI: AI solutions should help humans make better decisions while ensuring their implementation is equitable and democratized, with minimal environmental impact. While there is no single universal yardstick, it’s important that societies and organizations develop a point of view and refine it as AI-driven technologies mature. In this regard, aim to achieve these three outcomes:

- Equity in data usage: Create AI solutions using unbiased data to prevent systemic biases in the AI solutions. This ensures that the decisions made by AI are fair and just for all users.

- Democratization of knowledge: Empower global knowledge sharing by developing AI technologies with a commitment to serve humanity. This approach enhances access to information and resources across diverse populations.

- Environmental responsibility: Design AI systems to be energy efficient and minimize carbon footprint. It’s crucial that AI development considers ecological impacts, striving for sustainability in technology usage.
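As one way to put the equity outcome above into practice, a team might compare how often each group in a dataset receives a favorable outcome. The sketch below computes per-group selection rates and the gap between the highest and lowest rate, a measure commonly called the demographic parity difference. This is only one of several fairness metrics, and choosing it here is our assumption. The field names (`group`, `approved`) and the sample rows are invented for illustration.

```python
from collections import defaultdict


def selection_rates(records, group_key, outcome_key):
    """Return the share of favorable outcomes for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for row in records:
        totals[row[group_key]] += 1
        if row[outcome_key]:
            positives[row[group_key]] += 1
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(rates):
    """Difference between the highest and lowest group selection rate."""
    return max(rates.values()) - min(rates.values())


# Invented sample: 3 of 4 approvals in group A, 1 of 4 in group B.
sample = [
    {"group": "A", "approved": True},
    {"group": "A", "approved": True},
    {"group": "A", "approved": True},
    {"group": "A", "approved": False},
    {"group": "B", "approved": True},
    {"group": "B", "approved": False},
    {"group": "B", "approved": False},
    {"group": "B", "approved": False},
]

rates = selection_rates(sample, "group", "approved")
print(rates)                          # {'A': 0.75, 'B': 0.25}
print(demographic_parity_gap(rates))  # 0.5
```

A large gap does not prove that the data is unfair, but it flags where a closer review of the training data is warranted before a model learns from it.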

Establishing AI governance to support business goals

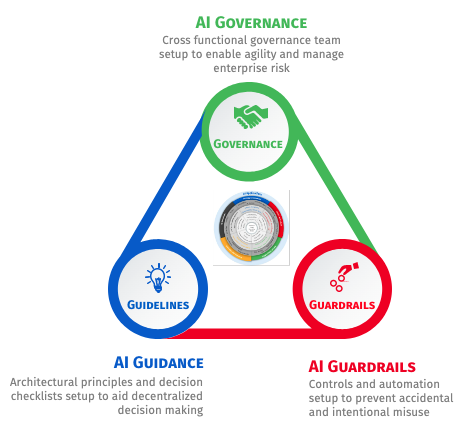

To effectively integrate AI solutions that are symbiotic, secure and sustainable, you’ll need robust governance policies that reflect your strategic goals. To establish such policies, you’ll need to develop clear guidelines and practices for AI usage that span governance, guidance and guardrails. Here’s how organizations can implement these critical functions:

- AI governance: Codify enterprise-wide AI development guidelines at the outset of your AI journey. These guidelines should address usage, privacy and security, and be enforced by a cross-functional team with the necessary authority and institutional knowledge. Some key areas to focus on include AI use, executive sponsorship, business ownership, AI literacy and awareness.

- AI guidance: Implement guidelines that include architecture principles covering platform, data, security, and privacy, along with use-case selection criteria to support decentralized decision-making. Ensure these guidelines are enforced by an authoritative cross-functional team.

- AI guardrails: Define processes and deploy tools that ensure that your AI applications adhere to established guidelines and deter misuse — whether intentional or accidental.
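A guardrail can be as simple as a check that runs before any prompt reaches a model. The sketch below illustrates the idea under stated assumptions: the approved use-case list, the `apply_guardrails` function and the single email pattern are hypothetical, and a real deployment would cover many more categories of sensitive data.

```python
import re

# Hypothetical allowlist that a governance team might maintain.
APPROVED_USE_CASES = {"support_summary", "code_review"}

# Deliberately simple email pattern; real PII detection needs broader coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def apply_guardrails(use_case, prompt):
    """Block unapproved use cases and redact email addresses from the prompt.

    Returns the redacted prompt and the number of redactions made.
    """
    if use_case not in APPROVED_USE_CASES:
        raise PermissionError(f"use case not approved: {use_case}")
    redacted, count = EMAIL_RE.subn("[REDACTED_EMAIL]", prompt)
    return redacted, count


safe_prompt, hits = apply_guardrails(
    "support_summary",
    "Contact jane.doe@example.com about the ticket",
)
print(safe_prompt)  # Contact [REDACTED_EMAIL] about the ticket
print(hits)         # 1
```

Raising an error for unapproved use cases deters accidental misuse, and returning the redaction count gives the audit trail something to record.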

The good news is that many organizations are already on the right track when it comes to responsible AI deployment. Our 2024 report found that, as companies increasingly integrate AI across functions, many of them are doing a good job of prioritizing ethical governance, including governance focused on explainability and fairness (54%), data security (51%) and oversight (47%).

To encourage more organizations to embark on this journey of responsible AI, it is essential for them to commit to the well-being of both AI-driven technologies and the people who benefit from them.