As interest in AI has exploded over the past year — and with the recent launch of Foundry for AI by Rackspace (FAIR®) — Rackspace Technology® needed a way to educate employees (Rackers) quickly on the human and ethical aspects of AI practices so they can interact with AI safely and responsibly.

Rackspace University’s technical training team, understanding the urgent need for AI training, swung into action and developed a live, two-hour digital workshop on AI ethics and fundamentals in just 16 days. Now, Rackers around the world are being trained on how AI technologies work, the ethical challenges AI presents, and how to traverse the human-AI nexus.

AI elicits excitement — and anxiety

The explosive growth of generative AI technologies has created excitement as well as concern about how AI could impact human lives. AI technologies are not only powerful; they are also becoming pervasive. We already use AI and machine learning technologies every day. They power smartphone voice assistants, provide product recommendations, filter spam, evaluate medical images and generate code that will run future applications, websites and devices. Their use is likely to expand rapidly in the near future.

Recognizing that it’s the unknown that elicits trepidation, Rackspace University prioritized AI training development. Our goal was to help all Rackers, no matter their technical background, understand how AI/ML technologies work and how AI might impact them. Our priority was to ensure everyone understands the ethics surrounding these new technologies.

About our new AI Ethics in Action training program

The Rackspace University technical training team led development for the new AI Ethics in Action training program. Thanks to our team’s background in onboarding and upskilling training for cloud engineers, we were uniquely positioned to move quickly on AI training development. We hold numerous certifications in cloud computing and data engineering from Amazon Web Services (AWS), Google Cloud and Microsoft® Azure®. We also have experience in networking, security and technology outreach, along with academic research and teaching experience related to AI/ML.

The new course kicks off with an overview of machine learning methods and how AI is possible thanks to machine learning’s ability to find patterns in large data collections.

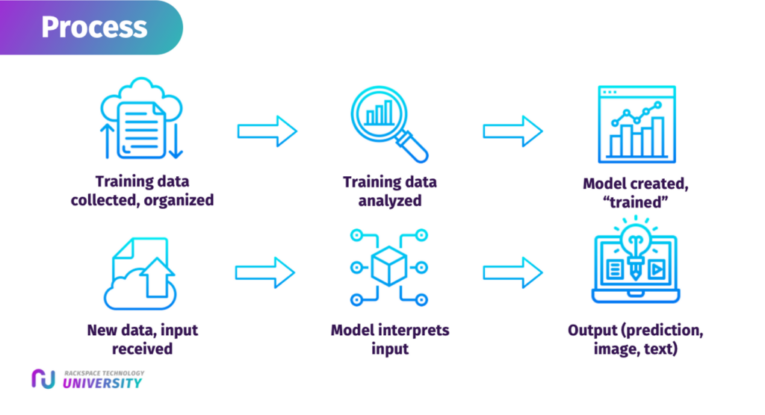

An illustration from the training shows how AI tools are built in six steps. AI is trained using machine learning methods that find trends in data and produce models, which are equations that use those trends to make mathematical predictions about the future. These predictions can be used to:

- Translate internet user inputs or messages

- Produce outputs like text, images and/or video

- Make decisions, such as whether or not an email is spam
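To make the idea of "trends in, predictions out" concrete, here is a minimal sketch of the spam decision mentioned above. It is not taken from the course: the training messages, the function names `train` and `is_spam`, and the word-counting approach are all assumptions chosen for illustration, and a real spam filter would use far more data and a more robust method.

```python
import math
from collections import Counter

# Hypothetical, hand-made training data: (message, label) pairs.
TRAINING_DATA = [
    ("win a free prize now", True),
    ("free money click now", True),
    ("claim your free prize", True),
    ("meeting moved to monday", False),
    ("please review the project notes", False),
    ("lunch on monday with the team", False),
]


def train(examples):
    """Find word trends in past data and turn them into per-word weights."""
    spam_counts, ham_counts = Counter(), Counter()
    for text, label in examples:
        (spam_counts if label else ham_counts).update(text.split())
    vocabulary = set(spam_counts) | set(ham_counts)
    # Add-one smoothing so a word seen in only one class never gives log(0).
    spam_total = sum(spam_counts.values()) + len(vocabulary)
    ham_total = sum(ham_counts.values()) + len(vocabulary)
    # The "model" is just an equation: one log-odds weight per known word.
    return {
        word: math.log((spam_counts[word] + 1) / spam_total)
        - math.log((ham_counts[word] + 1) / ham_total)
        for word in vocabulary
    }


def is_spam(model, text):
    """Predict by summing the weights of known words; unknown words add 0."""
    score = sum(model.get(word, 0.0) for word in text.split())
    return score > 0


model = train(TRAINING_DATA)
print(is_spam(model, "free prize now"))             # True
print(is_spam(model, "project meeting on monday"))  # False
```

Notice that the model knows only what its six training messages taught it. A message made entirely of words it has never seen gets a score of zero, which is one small example of how gaps in training data shape what an AI tool can and cannot do.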

Rackers use their understanding of this process to examine real-world AI ethics challenges, such as instances where AI, through faults or unintended consequences, negatively impacts human lives. When Rackers learn about the potential issues, they can reflect on which steps in the AI process could have caused something to go wrong.

Rackers also formulate questions they can use to evaluate AI tools and outputs. This helps them see how they can avoid creating similar problems through their own use of AI. This high-level understanding of AI allows them to identify possible dangers and avoid them.

Understanding AI complexities and ethical challenges

During course creation, we decided not to provide learners with a simple list of AI ethics dos and don’ts. In the face of a rapidly evolving new technology, our aim was to train Rackers to think critically about AI, to ask questions and to make responsible decisions in novel situations.

Our course includes real-world AI situations that demonstrate how the complexities in AI can present ethical challenges across a variety of applications and impact people in many ways, for example:

- AI chat tools can compromise private information and may provide incorrect, biased or offensive outputs.

- AI may discriminate when making hiring decisions if groups of people are underrepresented in the data.

- AI technologies like facial recognition may have accuracy issues that remain undocumented.

- Inaccurate AI models can pose specific challenges regarding equity, diversity, inclusion and belonging for groups that are historically underrepresented within a population.
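One question that follows from the examples above is whether any group is thinly represented in the data a tool learned from. The sketch below shows one simple way to ask that question. The function name `underrepresented_groups`, the 10% threshold and the sample records are assumptions made for illustration, not part of the course or of any specific fairness standard.

```python
from collections import Counter


def underrepresented_groups(records, group_key, min_share=0.10):
    """Return groups whose share of the records falls below min_share."""
    counts = Counter(record[group_key] for record in records)
    total = sum(counts.values())
    return sorted(
        group for group, count in counts.items() if count / total < min_share
    )


# Hypothetical applicant records; only the grouping field is shown.
applicants = (
    [{"region": "north"}] * 12
    + [{"region": "south"}] * 7
    + [{"region": "east"}] * 1
)

print(underrepresented_groups(applicants, "region"))  # ['east']
```

A check like this only counts rows. It cannot tell you whether the data about a group is accurate or whether a model treats that group fairly, so it is a starting point for questions rather than an answer.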

As Rackers examine instances where AI has negatively impacted humans, we offer three lessons to draw on. If you’re developing AI training at your organization, or if you simply want to work with AI tools more responsibly, we encourage you to also embrace these lessons.

- Verify accuracy and privacy: Among the many ethical challenges with AI is that its outputs can contain inaccurate information. Also, what users enter into an AI tool could expose personal information or confidential data. That’s why whenever you use an AI tool like Google Bard or GitHub’s Copilot code generation tool, it’s crucial to independently evaluate the AI outputs for accuracy and ensure you have not sent any private data to the AI system.

- AI is built on past data: Because AI tools are trained on past data, their outputs can reproduce the trends in that data. This means AI can discriminate against groups that are underrepresented in training data, and it can reproduce biased or offensive content found in the training materials. When you use AI, it is essential to think about which negative issues from the past might erroneously be reproduced by AI today.

- Think about what you can’t know: The equations that AI technologies employ are profoundly complex. Also, the data used for training is often large enough that no single person could ever evaluate all of it. Further, AI technologies may be proprietary or protected. As a result, there’s a limit to what we can know about how an AI system works. Always consider what you can’t know and understand how that might impact your output.
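For the first lesson, one practical habit is to screen text for obvious personal data before it is sent to an AI tool. The following is a minimal sketch under stated assumptions: the `redact` function and its two patterns (email addresses and one common phone number layout) are hypothetical and far from exhaustive, so a check like this supplements human judgment and does not replace it.

```python
import re

# Illustrative patterns only; real personal data takes many more forms.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def redact(prompt):
    """Replace matches with placeholders and report which kinds were found."""
    findings = []
    for label, pattern in (("EMAIL", EMAIL), ("PHONE", PHONE)):
        prompt, count = pattern.subn(f"[{label}]", prompt)
        if count:
            findings.append(label)
    return prompt, findings


safe_prompt, findings = redact(
    "Summarize the ticket from jane.doe@example.com, callback 555-010-1234."
)
print(safe_prompt)  # Summarize the ticket from [EMAIL], callback [PHONE].
print(findings)     # ['EMAIL', 'PHONE']
```

Names, account numbers, customer details and confidential business information would all pass through this sketch untouched, which is why independently reviewing what you send remains essential.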

Since 2009, Rack Gives Back and the Rackspace Foundation have donated more than $12 million to non-profit organizations and schools in Rackers’ own communities. Owing to the history and culture of Rackspace Technology, we are happy to see that ethics, responsibility and putting people first are the hallmarks of our approach to AI as well.

If your organization is considering implementing AI tools or developing AI training, we urge you to adopt a similar approach. AI/ML are positioned to be transformative technologies. Keeping ethics and people in mind as we use and develop these technologies ensures that we minimize harm to individuals and communities. It also helps ensure that AI is designed, deployed and used in ways that benefit human beings.

Discover the transformative potential of AI

Your AI journey starts with our FAIR Ideate. Take the next steps toward secure and sustainable AI adoption today.