Ever since ChatGPT stormed onto the market in November 2022, the world of generative AI has been nothing short of sensational! Through a simple interface, you now hold the power to chat with and direct a generative model, instructing it to answer questions, generate content, summarize documents, and more, effectively scaling human abilities.

Generative AI models have proven their massive potential to augment workforce capabilities and boost employee productivity across diverse domains such as marketing, research, software development, and beyond. McKinsey’s report “The economic potential of generative AI: The next productivity frontier” estimates that generative AI and other technologies could automate work activities that absorb a staggering 60-70% of employees’ time today, and that generative AI could add the equivalent of $2.6 trillion to $4.4 trillion USD to the global economy annually.

Business and technical decision-makers have been eager to bring this revolution into their enterprises and reap its benefits. However, this enthusiasm has been accompanied by valid concerns about the security and privacy of sensitive enterprise data sent to external servers hosting these models, leading some organizations to restrict or outright ban ChatGPT and similar services. According to The Washington Post, corporate leaders increasingly worry that employees will spill corporate secrets, and even some of the largest tech companies are restricting employee use of chatbots.

Fortunately, many cloud providers have stepped up to address these concerns by enabling organizations to use cutting-edge generative models within the secure confines of their own accounts. Cloud providers such as Amazon Web Services, Microsoft Azure, and Google Cloud now offer generative AI to their clients under the same service terms that govern their other cloud services, committing that organization data will not be used for model training or other purposes and applying their industry-leading security and compliance controls, which mitigates the risk of data exposure.

This article delves into the considerations for enterprises looking to adopt chatbots powered by generative AI models, specifically text-generation Large Language Models (LLMs), within their own data environments, and explores strategies to alleviate the associated concerns.

Let us embark on this transformative journey and unlock the potential of LLM-powered chatbots for enterprise success.

What is an LLM-Powered Chatbot?

At their core, conventional chatbots work by mapping user messages to a predetermined list of intents and then funneling users through scripted conversational pathways that address each request. This leads to rigid, predictable, and artificial interactions. LLM-powered chatbots, by contrast, can engage in far more human-like conversations. Thanks to the LLM, these bots can draw on data from diverse sources and respond with contextual depth and relevance. This advancement lets users converse with their data and opens new possibilities for enhancing user experience and augmenting human abilities.
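To make the rigidity of the conventional approach concrete, here is a minimal sketch of intent matching. It assumes a hypothetical keyword table (`INTENT_KEYWORDS`) and canned responses; real platforms use trained intent classifiers, but the limitation is the same: any request outside the predefined intents falls through to a fallback.

```python
# Toy intent-based chatbot: each message is mapped to one of a fixed set of
# intents by keyword overlap, and each intent returns a canned response.
# The intents, keywords, and responses are hypothetical examples.
import re
from typing import Dict, Set

INTENT_KEYWORDS: Dict[str, Set[str]] = {
    "reset_password": {"reset", "password", "forgot"},
    "check_leave_balance": {"leave", "vacation", "balance"},
}

RESPONSES: Dict[str, str] = {
    "reset_password": "To reset your password, visit the self-service portal.",
    "check_leave_balance": "You can view your leave balance in the HR system.",
    "fallback": "Sorry, I didn't understand. Please rephrase your request.",
}


def classify_intent(message: str) -> str:
    """Return the intent whose keywords overlap most with the message."""
    tokens = set(re.findall(r"[a-z0-9']+", message.lower()))
    best_intent, best_score = "fallback", 0
    for intent, keywords in INTENT_KEYWORDS.items():
        score = len(tokens & keywords)
        if score > best_score:
            best_intent, best_score = intent, score
    return best_intent


def respond(message: str) -> str:
    """Look up the canned response for the classified intent."""
    return RESPONSES[classify_intent(message)]
```

A question such as "Summarize last quarter's sales report" matches no intent and receives the fallback reply, which is exactly the gap an LLM-powered bot is meant to close.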

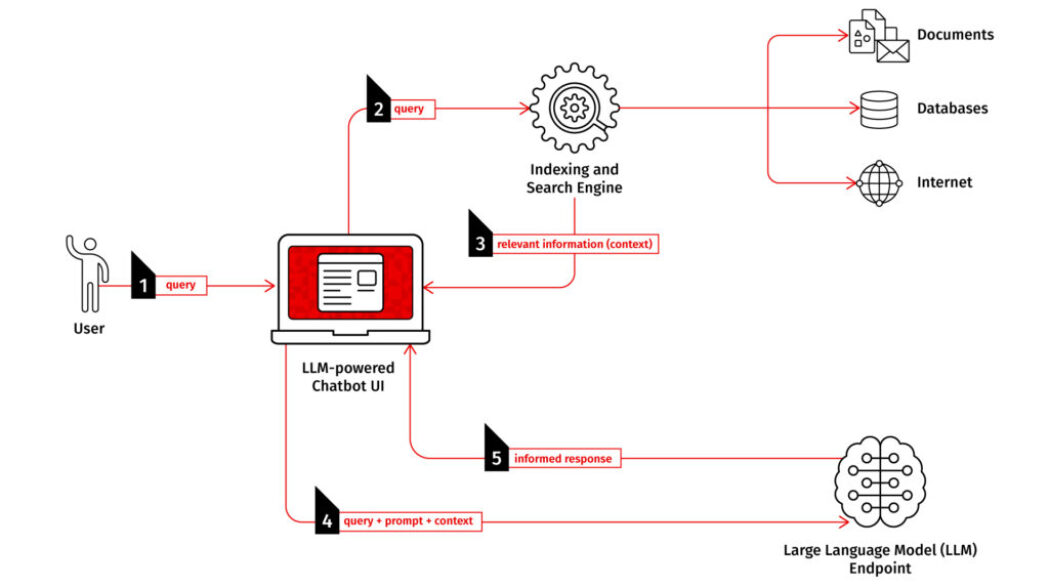

The accompanying figure shows a basic LLM-powered chatbot that augments its responses with data ingested from multiple sources into Amazon Kendra, an intelligent enterprise search service, enabling the chatbot to deliver well-informed and accurate responses. For each user question, the bot retrieves the most relevant passages and includes them in the prompt sent to the LLM, so the answer is grounded in enterprise data. This pattern is known as Retrieval-Augmented Generation (RAG).
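The retrieve-then-generate loop behind RAG can be sketched in a few lines. In this sketch, a naive keyword-overlap retriever stands in for an enterprise search service such as Amazon Kendra, the documents are hypothetical, and the call to the LLM endpoint itself is omitted; the point is how retrieved passages are placed into the prompt.

```python
# Minimal Retrieval-Augmented Generation (RAG) sketch.
# The keyword-overlap retriever is a stand-in for an enterprise search
# service; the resulting prompt would be sent to an LLM endpoint (not shown).
import re
from dataclasses import dataclass
from typing import List, Set


@dataclass
class Document:
    source: str
    text: str


def tokenize(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9-]+", text.lower()))


def retrieve(query: str, corpus: List[Document], top_k: int = 2) -> List[Document]:
    """Rank documents by the number of query terms they share; keep top_k."""
    q = tokenize(query)
    scored = [(len(q & tokenize(d.text)), d) for d in corpus]
    scored = [pair for pair in scored if pair[0] > 0]  # drop irrelevant docs
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:top_k]]


def build_prompt(query: str, passages: List[Document]) -> str:
    """Ground the LLM by placing retrieved passages ahead of the question."""
    context = "\n".join(f"[{d.source}] {d.text}" for d in passages)
    return (
        "Answer the question using only the context below. "
        "If the answer is not in the context, say you don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    )
```

Citing the source of each passage in the prompt (for example `[hr-policy.pdf]`) lets the chatbot point users back to the original document, which helps with trust and auditing.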

Yet, is this all it takes to harness the true potential of these advanced bots for your enterprise?

For those considering integrating LLM-powered chatbots into their enterprise, the journey is not as straightforward as it might seem. While cloud providers offer the tools, enterprises must contemplate various factors to ensure the success of these chatbots in their organizations.

So, what are the considerations that enterprises ought to take into account when embarking on this transformative endeavour?

The answer lies in understanding the key enterprise characteristics that shape enterprise data and machine learning landscapes. From there, we explore how to handle their implications so you can harness the true power of LLM-powered chatbots within your organization.

What are the characteristics of an enterprise?

Enterprises are large national or international organizations known for their significant scale and extensive reach. They possess several defining characteristics that set them apart in the business landscape and underpin the considerations within this article:

Sizeable and Diverse Workforce with Complex Organizational Structures: Enterprise organizations boast a substantial workforce drawn from various cultural backgrounds and possessing a wide range of skill sets. To manage their operations efficiently, they often promote specialization and division of labour: different departments or teams focus on specific tasks or aspects of the business, leading to complex hierarchies and organizational structures.

Sizeable and Diverse Customer Base: Enterprises serve a broad and diverse customer base, catering to consumers, businesses, governments, or a combination of these entities. Many enterprises operate globally, maintaining offices, facilities, or operations in multiple countries, which exposes them to a variety of regulatory and cultural challenges.

Regulatory Compliance and Governance: Owing to their size and market influence, enterprises must adhere to various regulations and corporate governance standards, spanning financial reporting, environmental compliance, labour laws, and more.

Formalized Decision-Making Processes: The sheer scope and presence of these organizations demand operational standardization to improve efficiency and reduce errors. This standardization extends to decision-making, resulting in a formal process in which business and technical decision-makers collaborate to articulate business problems and the related KPIs that justify adopting a new technology. The flip side is that this more meticulous process can slow technology adoption.

As a business or technical decision-maker, what factors should you consider for an enterprise-grade LLM-powered chatbot?

What are the enterprise considerations when implementing an LLM-powered chatbot?

When it comes to implementing an LLM-powered chatbot in your enterprise, several essential considerations arise concerning your data, the application, integration, automation, adoption, and ROI.

Solution Buy-in and Adoption

With their diverse users, complex organizational structures, and established processes, enterprises often face challenges when adopting new technologies internally. Before the project starts, a business case must articulate how the new solution affects the bottom line in order to obtain executive buy-in; once the solution is built, a launch plan covering marketing, training, and similar activities is needed to drive adoption.

Considerations include:

1. Identifying the roles, functions, individuals, and organizational structures most impacted by this solution.

2. Identifying the business processes that this solution augments or improves.

3. Measuring the existing process performance in terms of enterprise KPIs.

4. Estimating the solution’s performance using the same KPIs.

5. Estimating the solution’s development, operation and maintenance costs.

6. Performing a cost-benefit analysis and estimating the ROI.

7. Planning for the solution rollout, including a launch plan, and training employees if applicable.
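Once the KPIs from steps 3 and 4 are quantified, steps 5 and 6 reduce to simple arithmetic. The sketch below assumes the main benefit is employee time saved and uses hypothetical field names and placeholder figures; your own business case may weigh other KPIs such as resolution time or customer satisfaction.

```python
# Back-of-the-envelope cost-benefit sketch for an internal chatbot.
# All inputs are hypothetical placeholders; substitute your own baselines.
import math
from dataclasses import dataclass


@dataclass
class Estimate:
    users: int                         # employees expected to use the chatbot
    hours_saved_per_user_month: float  # from comparing baseline vs. solution KPIs
    loaded_hourly_cost: float          # fully loaded cost of one employee-hour
    build_cost: float                  # one-off development cost
    monthly_run_cost: float            # hosting, LLM inference, maintenance


def monthly_benefit(e: Estimate) -> float:
    return e.users * e.hours_saved_per_user_month * e.loaded_hourly_cost


def roi(e: Estimate, months: int) -> float:
    """ROI = (total benefit - total cost) / total cost over the horizon."""
    benefit = monthly_benefit(e) * months
    cost = e.build_cost + e.monthly_run_cost * months
    return (benefit - cost) / cost


def payback_months(e: Estimate) -> float:
    """Months until cumulative net benefit covers the build cost."""
    net = monthly_benefit(e) - e.monthly_run_cost
    return e.build_cost / net if net > 0 else math.inf
```

With 500 users each saving 2 hours a month at $50 per hour, a $250,000 build, and $20,000 in monthly running costs, a 12-month horizon gives an ROI of roughly 22% and a payback period of about 8.3 months.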

Data Consolidation and Governance at Scale

The sheer size of these organizations and their complex hierarchical structures produce large volumes of diverse and volatile data. Access to this data is often restricted to specific entities within the organization, which calls for a robust solution for data consolidation and governance that addresses the following considerations:

1. Enforcing strict access controls so that data is available only to the entities within the organization that are authorized to see it.

2. Ingesting and consolidating large volumes of diverse and volatile data and making it available to the generative AI model in a timely manner.

3. Ensuring data quality.

4. Enforcing data privacy, security, and other regulations.

5. Tracking data lineage and transformations for debugging and auditing.

6. Monitoring data pipelines, ensuring their reliability, resilience, and operational excellence.
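One common way to address items 1 and 5 in a RAG chatbot is to attach access-control and lineage metadata to every ingested document, then filter on the requesting user’s groups before any content reaches the LLM. This is a minimal sketch with hypothetical group names and field names; managed search services typically provide their own access-control mechanisms, which this does not reproduce.

```python
# Sketch of document-level access control plus lineage metadata, applied
# before retrieval so the LLM never sees content the user may not access.
# Field names and group names are hypothetical.
from dataclasses import dataclass
from datetime import datetime
from typing import FrozenSet, List


@dataclass(frozen=True)
class IngestedDoc:
    doc_id: str
    text: str
    allowed_groups: FrozenSet[str]  # entities permitted to see this document
    source_system: str              # lineage: where the document came from
    ingested_at: datetime           # lineage: when it was ingested


def authorized(docs: List[IngestedDoc], user_groups: FrozenSet[str]) -> List[IngestedDoc]:
    """Keep only documents sharing at least one group with the user."""
    return [d for d in docs if d.allowed_groups & user_groups]
```

Filtering before retrieval, rather than asking the LLM to withhold restricted content, keeps enforcement deterministic and auditable.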

Application Accessibility, Integrability, Security & Regulatory Compliance at Scale

The target users of these chatbots in an enterprise setting, whether customers or employees, are numerous and diverse, so enterprises need accessibility, security, and scalability requirements in place. Moreover, enterprises already have established processes and solutions, which on their own pose the challenge of fragmented solution silos. A new solution should not add to this complexity; it should integrate well with existing systems, including data sources and customer identity and access management systems. Seamless integration is crucial for providing accurate and up-to-date information to users. These facts add the following to the list of considerations:

1. Supporting multiple languages and alternative interaction options, such as voice interactions.

2. Ensuring the trustworthiness of the chatbot and establishing guardrails.

3. Implementing customer identity and access management to authenticate users.

4. Enabling scalable concurrent access to the chatbot.

5. Tracking user access and interactions with the chatbot solution in a reliable and scalable manner.

6. Ensuring seamless scalability and elasticity of the LLM endpoint.

7. Ensuring communication security.

8. Monitoring and logging the solution to enable debugging and ensure performance.

9. Integrating the chatbot with existing systems, databases, and applications.
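Items 2 and 5 often meet in a thin layer that screens each message before it reaches the LLM and writes an audit record. The sketch below uses a regular expression to mask e-mail addresses and a hypothetical topic denylist; production guardrails usually rely on dedicated classifiers or managed guardrail services, so treat this only as an illustration of where such checks sit.

```python
# Illustrative input guardrail with audit logging.
# BLOCKED_TOPICS and the log fields are hypothetical; logging handlers are
# assumed to be configured elsewhere in the application.
import json
import logging
import re
from datetime import datetime, timezone
from typing import Tuple

logger = logging.getLogger("chatbot.audit")

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
BLOCKED_TOPICS = ("salary of", "password for")


def redact(text: str) -> str:
    """Mask e-mail addresses so they are not sent to the LLM or logged."""
    return EMAIL_RE.sub("[REDACTED EMAIL]", text)


def guard_input(user_id: str, message: str) -> Tuple[bool, str]:
    """Return (allowed, sanitized_message) and write an audit record."""
    lowered = message.lower()
    allowed = not any(topic in lowered for topic in BLOCKED_TOPICS)
    sanitized = redact(message)
    logger.info(json.dumps({
        "ts": datetime.now(timezone.utc).isoformat(),
        "user": user_id,
        "allowed": allowed,
        "message": sanitized,
    }))
    return allowed, sanitized
```

Logging the sanitized message rather than the raw one keeps the audit trail itself from becoming a store of sensitive data.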

Solution Maintenance and Automation

An enterprise chatbot will typically be more complex than the simple example presented above, because it must address your specific use case and the considerations discussed so far while catering to a diverse employee and customer base. This complexity naturally requires more code for backend processing and frontend user interfaces, which makes changes to the bot harder to deploy, lengthens time to production, and increases the risk of errors.

Maintaining such a solution through manual processes is error-prone and time-consuming.

Furthermore, like any machine learning solution, these bots are expected to perform well in production. Because they are user-facing, the need to maintain good performance and to promptly address any performance degradation or safety concerns is even greater, which raises considerations such as the following:

1. Automating code deployment for the various components of this solution that require maintenance and updates.

2. Monitoring the solution performance in production and enabling feedback mechanisms.

3. Setting up an appropriate notification system around monitoring.
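Items 2 and 3 can be combined by tracking user feedback (for example, thumbs up or down on each answer) over a sliding window and alerting when the helpful-answer rate drops below a threshold. In this sketch, the window size, threshold, and `notify` callback are hypothetical; in practice the callback might post to a paging or messaging system.

```python
# Sliding-window feedback monitor that raises a single alert when the
# helpful-answer rate drops below a threshold, and re-arms on recovery.
from collections import deque
from typing import Callable, Deque


class FeedbackMonitor:
    def __init__(self, window_size: int, threshold: float,
                 notify: Callable[[str], None]) -> None:
        self.window: Deque[bool] = deque(maxlen=window_size)
        self.threshold = threshold
        self.notify = notify
        self.alerted = False

    def record(self, helpful: bool) -> None:
        self.window.append(helpful)
        if len(self.window) < self.window.maxlen:
            return  # wait until the window is full before judging
        rate = sum(self.window) / len(self.window)
        if rate < self.threshold and not self.alerted:
            self.notify(f"Helpful-answer rate fell to {rate:.0%} "
                        f"(threshold {self.threshold:.0%})")
            self.alerted = True  # avoid repeating the same alert
        elif rate >= self.threshold:
            self.alerted = False  # re-arm once performance recovers
```

Alerting once per degradation, rather than on every low-rated answer, keeps the notification channel useful instead of noisy.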

As we stand on the cusp of a revolution powered by generative AI, enterprises are poised to redefine innovation and efficiency through LLM-powered chatbots. By combining meticulous planning with advanced technology, businesses can foster a dynamic ecosystem where data-driven insights meet human expertise. Embrace this transformative journey and unlock the full potential of chatbots in revolutionizing enterprise operations.
